Aug 03, 2009

Most of my CMS selection clients are not just looking for software. They are looking for a solution that combines software with a hefty dose of services: configuration, customization, training, and support. Typically, once we have narrowed the field to a short list of products, we start looking at systems integrators that can help develop the solution. A well-executed implementation and roll-out of a marginal product usually yields better business results than a train-wreck project to implement best-fit software. You need to work with a services organization (internal or external) that can partner with you to define and develop the solution that achieves your goals.

The other day, I was talking with a friend about projects gone bad and he asked me if I had ever worked on a big fixed bid project that both client and supplier felt went very well. After considering all the projects that I had worked on and the projects that I hear about as an analyst, I had to say no. There was always disappointment and frustration on one or both sides (usually both). But I was able to think of many iterative time and materials relationships that benefited both sides over years of working together. I have now been out of the systems integration business long enough to say, without any personal agenda, that fixed bid systems integration work is a failed enterprise.

The best analogy that I can make is that a fixed bid contract is like marrying someone you met at a craps table on a Las Vegas vacation. I am not saying that all spontaneous Vegas marriages fail, but they definitely have the odds stacked against them. Here is why: during the sales process, both sides get wrapped up in the deal and suppress rational doubts and concerns. They force themselves to believe everything is going to work out. They force decisions and assumptions that become a millstone around the neck of the relationship.

The supplier talks himself into believing that he fully understands all the requirements and that every detail about his approach to solving them is accurate. That is simply impossible. No matter how long the pre-sales cycle lasts, it is impossible to fully understand every requirement and bring to light every latent expectation. The client doesn't know what he wants until he sees what he doesn't want. The initial estimate may have even been trimmed down because it "looked too big." The client also assumes he fully understands the requirements and believes he is reasonably flexible about the design details. He trusts that the fixed bid contract ensures the delivery of the solution in his mind's eye for the agreed-upon price in the stated time frame.

After the deal is consummated, there is a short honeymoon phase where everyone seems to work together productively. This analysis phase goes smoothly because the stressful deadlines are far away and there are no concrete tests for progress. Sometimes a project can go through design without any awareness that it is horribly off course. That realization usually happens during the first development milestones, when it becomes clear that the developer and client had significantly different understandings of the specification (if there even is one). Then things start to degrade very quickly. Both sides are stressed out and start to blame each other. Both parties feel like the other is being unreasonable. The contract that was supposed to protect everyone turns into a handcuff connected to the most annoying person in the world. Both sides start looking for a way out.

Any successful partnership depends on both parties working collaboratively and creatively to solve problems as they come up. A fixed bid contract prevents this cooperation by making the relationship inflexible. But, you may ask, how much flexibility is realistic when a client has a limited budget and has promised a certain outcome of the project to company leadership and/or the customers? With a fixed bid contract, there is at least a clear chain of blame. The business blames the project sponsor. The project sponsor blames the project manager. The project manager blames the consulting company. The consulting company blames the employees. The employees feel miserable and quality suffers. The contract provides unambiguous blaming instructions but it doesn't solve the problem. The project is still delayed and additional costs are still incurred.

A better strategy would be to partner with the supplier to jointly design and develop the optimal solution within those budget, time, and quality constraints. Achieving this kind of solution is an iterative/incremental process. The final solution will gradually materialize as options are explored and learning occurs (see Tracer Bullets). But this kind of cooperation requires a huge amount of trust and, sadly, very few business relationships enjoy much trust at all, especially given the perceived track record of failed technology projects.

So, how do you achieve this level of trust? It takes time. Just like it helps to date before you get married, you want to start off with some small, low-risk projects. Both sides need to believe that if they work at it, the relationship will last a long time and benefit them. There also needs to be transparency and equal rewards. The systems integrator needs to be transparent about their capabilities and experience and they cannot expect a huge windfall from a single project. The systems integrator should consume project resources (budget) as if they were his own. The client should expect to pay for what he gets and not try to trick the supplier into committing to unreasonable results. As Graham Oakes says, if you do not try to make the relationship a win-win, you will probably wind up being the one who loses.

When you are buying services, you are really buying effort, not a result. A good project team will multiply the effort you purchased with skill and experience to deliver great things (maybe even better than you originally thought you wanted). The problem is, buyers are ill-equipped to gauge skill and experience. They feel more comfortable with the illusion that they are buying tangible results. Services companies that prefer to sell results rather than effort do so by charging a high risk premium (or "value pricing") and by having employees who can step up and overwork when the risk gamble is lost. So, the buyer is either overpaying or has hired an overworked team. Neither of these options sounds particularly appealing.

If you do find yourself forced into asking for a fixed bid contract, do not assume that you can just set your calendar to the planned launch date and wait for the results. Instead, manage the project as actively as possible. Set up lots of intermediate milestones with exit criteria to test that the project is on track. If possible, break up the project into multiple small fixed bid phases. For example, have a fixed bid design phase that ends with a prototype or semi-functional core of the application. Make sure that the design treats the implementation budget as one of its requirements or constraints. Business owners: don't let your staff get away with throwing the systems integrator under the bus when a project fails. Make them equally accountable for the outcome of the project. If the systems integrator is underperforming, you should learn of it a quarter of the way into the project, not within weeks of the planned launch date. Even better, don't force your staff to come back with a fixed bid contract. Encourage the assembly of a high-performing team of staff and consultants that maximizes the value of your budget.

Jul 31, 2009

As I mentioned before, I use many different social media services for different purposes. I realized that I have been unconsciously operating an elaborate decision tree of what to post where. On a whim, I tried to map it out and I found the results interesting.

A couple of notes...

-

The FriendFeed (ff) icons show which services are aggregated into FriendFeed.

-

I am just starting to use Tumblr again as a sort of personal blog. This exercise helped me find a use for Tumblr. For a while, I had been posting things directly into FriendFeed.

-

Flickr contains work and personal items. I use it for personal pictures (mostly public) and also screen captures of software that I review (kept private).

I am wondering if anyone else operates a similar decision tree and how conscious they are of it.

Jul 30, 2009

CMSWire and Water and Stone are conducting a survey of open source CMS users and implementers for an upcoming report on Open Source CMS Market Share. I don't generally put much stock in CMS popularity contests because they tend to favor products that address the lowest common denominator requirements (that is, brochure websites for sole proprietor-size businesses). That said, I took the survey and was pleasantly surprised by the depth of information that they are collecting. In particular, I am interested to know what people consider the most important market filters and also implementation details like the length and cost of implementation. I hope they include this information in the report. I also hope that "industrial grade" implementers and customers take part so that the results show a good cross section of the marketplace. As a participation incentive, there is an opportunity to win a $100 iTunes gift certificate. How can you pass that up?

Jul 22, 2009

Web content management systems are very good at capturing, managing, and rendering semi-structured content. They give the contributor tools for controlling the organization of a web site and the layout and composition of pages. However, when it comes to strictly relational, tabular data, all those features like versioning and preview tend to get in the way. You wouldn't build a customer relationship management (CRM), enterprise resource planning (ERP), or accounting system on top of a content repository designed to stage and deploy content. There are better tools for that.

A common pattern when you want to present highly structured, relational data on a website alongside managed content is to manage those data outside the CMS and then use the CMS to organize and augment them. I have seen this pattern used for years, but I didn't recognize it as a direct application of the Decorator Pattern until Will Ezell (from dotCMS) pointed it out. Until then, I had mainly used the decorator pattern at the object-oriented class level. Since then, I have been using the phrase "use the CMS to decorate your _______ data." This description seems to resonate with people even if they are not familiar with the book Design Patterns: Elements of Reusable Object-Oriented Software.

Here is a concrete example. Let's say that your ERP system is the system of record for your core product data: price, dimensions, materials, manufacturer, weight, distributor, availability, SKU, and size options. But these data are dry and not at all compelling to the consumer browsing an e-commerce site. You can use the CMS to add additional information like a description and photos of the product. You can also use the CMS to control where the product appears on the website: within a collection of promoted items on the home page; as a featured product on a department page; as part of an email newsletter. When designing the shopping experience of your customers, the features of a web content management system really come in handy. You can preview and stage the pages so you can see what they will look like. You can use scheduled publishing to make the pages go live on a specific date.

From an architectural perspective, the integration does not have to be that complex. Essentially, the content items that decorate your catalog data just have to be aware of a primary key that is managed in your ERP system. Most web content management systems allow you to configure a content editing form to use a database to populate a dropdown box or a more complex browsing interface. So a user might go into the CMS, create a new "Product" asset, select a Product ID from a dropdown list, and then add content to "decorate" that product. Most CMS rendering engines can read from external data sources. Once you have that shared key, the assembling of content and data happens at rendering time.
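To make the decorator idea concrete, here is a minimal Python sketch, assuming the ERP record and the CMS content item share a SKU as their key. The class names (`ErpProduct`, `ProductContent`, `DecoratedProduct`), the `render_product_page` function, and the in-memory dictionaries standing in for an ERP query and a CMS repository are all hypothetical; they are not the API of dotCMS or any other product. The wrapper delegates the dry catalog fields to the system of record and adds the CMS-managed description at rendering time.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from html import escape


@dataclass(frozen=True)
class ErpProduct:
    """Core product data owned by the ERP (the system of record)."""
    sku: str
    price: float
    weight_kg: float


@dataclass
class ProductContent:
    """CMS-managed item that decorates an ERP product via the shared SKU."""
    sku: str  # key selected from an ERP-populated dropdown in the CMS form
    description: str
    photos: list[str] = field(default_factory=list)
    featured_on: list[str] = field(default_factory=list)


class DecoratedProduct:
    """Wraps an ERP record and layers optional CMS content on top of it."""

    def __init__(self, record: ErpProduct, content: ProductContent | None):
        self._record = record
        self._content = content

    def __getattr__(self, name: str):
        # Called only for attributes not defined here: delegate to the ERP record.
        if name.startswith("_"):
            raise AttributeError(name)
        return getattr(self._record, name)

    @property
    def description(self) -> str:
        return self._content.description if self._content else ""

    @property
    def photos(self) -> list[str]:
        return self._content.photos if self._content else []


def render_product_page(sku: str, erp: dict[str, ErpProduct],
                        cms: dict[str, ProductContent]) -> str:
    """Join ERP data and CMS decoration for one product at rendering time."""
    product = DecoratedProduct(erp[sku], cms.get(sku))
    return (
        f"<h1>{escape(product.sku)}</h1>"
        f"<p>{escape(product.description)}</p>"
        f"<p>Price: ${product.price:.2f}</p>"
    )


# Hypothetical in-memory stand-ins for an ERP query and the CMS repository.
ERP_PRODUCTS = {
    "SKU-1001": ErpProduct("SKU-1001", 149.0, 7.5),
    "SKU-1002": ErpProduct("SKU-1002", 39.0, 1.0),
}
CMS_CONTENT = {
    "SKU-1001": ProductContent("SKU-1001", "A reading chair built for long afternoons.",
                               photos=["chair-front.jpg"], featured_on=["home"]),
}

print(render_product_page("SKU-1001", ERP_PRODUCTS, CMS_CONTENT))
```

Because the ERP remains the system of record, a product with no CMS decoration (`SKU-1002` here) still renders; it simply has an empty description until someone decorates it.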

This is one of those approaches that is so obvious that it is frequently ignored and overlooked. Once you are aware of this pattern and keep it in mind as an option, it becomes much less tempting to overload your CMS implementation to manage data that it was not designed to manage. The user interfaces can be optimized for specific purposes and your architecture becomes cleaner. When a content type gets complicated to manage, you should always ask yourself "am I using the right system to manage this data?"

Jul 21, 2009

Even in this down economy, finding technical talent can be extremely difficult. Résumés are worthless. It comes down to this: programming is a craft but we don't get to see the craftsmanship and creative process when we hire programmers. At least not before we narrow down the applicant pool of thousands to a short list of real candidates. After that, during the interview process, we can do things like logic questions and programming exercises to see how the programmers solve problems.

The issue of finding talent is a very real concern not just for start-ups but also for established organizations that are selecting technology (like a CMS) that they must staff up to support. The natural tendency is to go by the numbers. Choose a technology with a "household name" like Java, .Net, or PHP where programmers are a dime a dozen. You are bound to find a needle in a haystack that big. Where this logic breaks down is that when a technology is so widely known and used, everyone seems to have it on their résumé. You can't tell if the candidate just spewed out a few hundred lines of crappy code or if they have immersed themselves in the technology to the point where they understand the core principles and philosophy. You can't tell if they are a talented craftsman and are able to learn new things. By selecting a household name (commodity) technology you have just made the haystack bigger without necessarily adding more needles. It is hard to find a Jamie Oliver by putting out a search for people who can make a hamburger.

When it comes right down to it, a language is just syntax that lets you do things like assign variables, create conditionals (if statements), and loop through lists. Learning what is available in libraries is the true learning curve when it comes to a technology, and those libraries are constantly changing. So a good programmer is always learning. One of my favorite books about programming (The Pragmatic Programmer: From Journeyman to Master) recommends learning a new language every year to expose yourself to different approaches. The best programmers love the challenge of learning a new language or framework. They read books on different technologies and practices. If I were hiring a PHP developer, I might get an even better result by finding a great Java developer who is open to working in PHP. Of course, then I would have to find a great Java developer. If I were looking for a great Java developer, I could see myself looking for people who have worked in Python, Ruby, Groovy, or even Scala. That would show that they are committed to learning new things rather than churning out code the same old way.

When you look at it this way, the relative size of the "talent pool" should not drive you to a technology choice. You want to choose a technology that has proven itself to be more than a fad so that it will continue to evolve and mature. You want to work with a language that has good resources for learning (like articles, books, IRC, and mailing lists). But you don't want a technology just because a majority of technologists claim to know it. Most importantly, you want a good programmer. If a technology is sound and effective, a good programmer will be able to learn it and be productive with it.

Jul 17, 2009

Readers of Drupal for Publishers know that Drupal has been very successful in the small to medium newspaper market. In fact, the Newspapers on Drupal group is assembling a list of modules commonly used by newspapers. This effort has been going on for some time but there has been renewed energy because the older list was oriented around the outdated 5.x series of Drupal.

There is also a Drupal distribution for newspapers called ProsePoint that bundles Drupal, many of the modules listed by the Newspapers on Drupal Group, and some other third party code. I think that creating vertical specific distributions of software is a neat idea and there are lots of great examples. Gartner has been talking about CEVAs (Content Enabled Vertical Applications) for years but it is unlikely that their commercial software vendor audience will be as quick to capitalize on the idea as open source communities.

From an open source community perspective, vertical distros introduce interesting dynamics. On the one hand, these groups definitely strengthen the bond within segments of the community who can benefit greatly by collaborating with peers with very similar needs. On the other hand, specialization of community segments requires strong central leadership to maintain common ground and prevent the different groups from becoming too self-absorbed and self-interested. I don't think this is a problem in the Drupal world because leaders in the Newspapers on Drupal community seem to have a very strong belief in and commitment to the Drupal project as a whole. For now, at least, this very active constituency seems to be nothing but a positive force in the greater Drupal movement.

Jul 16, 2009

When a company builds a business case for acquiring a web content management system, a key selling point is this vision of business users being capable and willing to build the website of their dreams. In this dream, the webmaster and other technical staff are replaced by a hyper-caffeinated, mind-reading, web-savvy C-3PO. The companies that really buy into this vision are usually thoroughly disappointed with the results of the implementation. As a consultant interested in my clients' success, it is my responsibility to talk my more optimistic clients down to a more realistic set of expectations. In other words, I have a tendency to rain on parades.

The metaphor that I find to be the most helpful to explain the realistic role of a web content management system is that a WCMS is a web page (and RSS feed) factory. In some implementations, the CMS is designed to create micro-sites in the same factory-like manner but it is still a factory. Here are the reasons why:

-

Like a factory, a web content management system requires up-front investment to set up. Even if the underlying software is free, you still need to configure it to produce the kind of pages that you want. That investment only pays off after you have produced a certain number of units (pages), so it doesn't make sense to implement a CMS to manage a five-page website that rarely changes.

-

Factories are set up to produce a limited number of different products. As much as he might want to right now, a worker on the Chrysler Aspen production line cannot go to his workstation one morning and start building a better-selling model: not the one that he saw driving down the street the day before and not the one he dreamt up as he was sleeping. All the tooling needs to be reconfigured and he doesn't have the skills to do that. In fact, most auto factories shut down for a period of time each year to set up the machinery for the new model year. The people who do this work have a very different set of skills and permissions than the line workers. They also have the design specs that have been blessed by the management of the company. In the CMS world, this translates into content types and presentation templates that technical people usually have to work on. If you want to build a totally different sub-site with different types of content and different layouts, you need to bring in the propeller heads. That said, the content types and templates can be designed to let the content producer select options or exercise creativity in specific areas. Deane Barker has a nice post describing these boundaries as load bearing walls.

-

Factories need raw materials and labor to produce their output. The input of a content management system is content and it has to come from somewhere. The CMS will not make the content, but it can be used to add value to the content by providing functionality that a person can use to organize and present the content to different audiences. Often people look at their webmasters as simple HTML typists but in reality they are usually much more. The good ones proofread the content that they were given. They fill in the gaps by creating or finding content that they were not given. They coordinate content from different providers. They navigate through the site from the perspective of a visitor. The "webmaster@" email address forwards complaints to their inbox. The CMS itself won't do that for you. Only people can do that. The CMS will not eliminate the webmaster but it will make the webmaster more productive by taking some of the mundane mechanics out of the job. Maybe the webmaster can be a little less technical and focus more on the coordination and accountability side of the job.

-

There is often a trade-off between flexibility and simplicity. One summer during high school, I spent an unbearable day in a factory at a machine that cut three-foot-long cardboard tubes into what you would see in the center of a roll of duct tape. I put the tube on an arm, pressed a button, and stood back to watch the arm spin and the blades come down. Then I took the rolls off and repeated. This was a very simple machine to use, but I couldn't make a paper towel or toilet paper roll with it, not unless I had a machinist come in to reconfigure it. They could have built an even simpler machine that loaded the tubes itself, but that would probably have required more machinist time to configure. With a CMS, you can simplify the tool by taking away options and control. For the non-technical user, you need to be very deliberate about what options to expose. You need to confine their creativity to small areas (like a rich text area in the middle of the page). Most CMSs accommodate this by providing different user interfaces for power users and non-technical users. This would be the manufacturing equivalent of a special factory where real craftsmen make limited edition products and prototypes.

-

Machines that maximize flexibility and simplicity are achievable but at a cost. Some machines are exquisitely designed to present just the right options to the operator (in a perfectly intuitive way) and automate everything else. Getting to this point can take hundreds of refining iterations. There are diminishing returns on these refinements so it is rare that a company can make the ROI case for this level of investment. I doubt that Starbucks will ever build an espresso machine that is so easy to use that any customer can walk in and make his own grande, half-caff, soy, triple, iced americano with lemon (in a venti cup).

-

Being a factory line worker brings less satisfaction than being a skilled artisan. As in my short career as a cardboard technician, operating a machine is extremely boring when all the options are taken away. On the upside, this lets the contributor focus more on the content (where they should be applying their creativity anyway); but if the contributors don't particularly like to write either, you have a problem. Often content contributors use poor usability as an excuse for avoiding the difficult task of creating content. If this is the case, no amount of user-friendliness will compel them to take ownership of their content. Factory workers don't just burst into the plant to voluntarily produce cars. They need to be motivated by compensation and by pride in the quality of their work (what they do have control over). With a CMS, content contributors have less responsibility for the layout and branding of the site, but they are responsible for the words and pictures and the organization of the content. The quality and craftsmanship of those aspects of the site need to be recognized.
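The "load bearing walls" and "confine their creativity to small areas" ideas from the list above can be sketched as a content type whose fields and options are fixed by the template developer, with a single rich text area left open to the contributor. This is a hypothetical Python illustration, not how any particular CMS defines content types; `PressRelease`, `ALLOWED_LAYOUTS`, and the markup are made up for the example.

```python
from dataclasses import dataclass
from html import escape

# Options the template developer decided to expose (hypothetical values).
ALLOWED_LAYOUTS = ("one-column", "two-column")


@dataclass
class PressRelease:
    """Hypothetical content type: contributors may only fill in these fields."""
    title: str
    body_html: str  # the one rich text area where the contributor is free
    layout: str = "one-column"

    def __post_init__(self) -> None:
        # Reject anything outside the options the template was designed for.
        if self.layout not in ALLOWED_LAYOUTS:
            raise ValueError(f"layout must be one of {ALLOWED_LAYOUTS}")


def render(item: PressRelease) -> str:
    """Fixed template: branding, header, and footer are not editable."""
    return (
        '<header class="brand">Example Corp</header>'
        f'<main class="{item.layout}">'
        f"<h1>{escape(item.title)}</h1>"
        # Assumes body_html was already sanitized by the rich text editor.
        f'<div class="rich-text">{item.body_html}</div>'
        "</main>"
        "<footer>Example Corp</footer>"
    )


print(render(PressRelease("Q3 results", "<p>Revenue was flat.</p>")))
```

Adding a third layout means changing `ALLOWED_LAYOUTS` and the stylesheet, which is machinist work in the factory metaphor; the contributor only ever picks from what the template already supports.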

It is hard to imagine a worse buzz-kill than to have your knowledge workers and marketing staff picture themselves as machine operators; but I have yet to talk a client out of implementing a CMS (except in cases when they already have a CMS that is working quite fine but they are struggling for other reasons). The reason is that once you get past a certain volume of content, you can't manage it without the help of tools that take away some of the personal craftsmanship in the design and functionality of each individual page (you can't manufacture a million cars without mass production factories either). Mass production of pages is a good thing because the audience wants the information, not each content contributor's own personal vision of how it should look. We tried that model. It was called GeoCities and it didn't work out that well. The sites were just awful to look at and the content was out of date.
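The "certain volume of content" point can be stated as a rough break-even condition. This is a simplified model with hypothetical symbols, and it ignores ongoing licensing, hosting, and maintenance costs: S is the up-front cost of setting up the CMS implementation, c_m is the cost of producing or updating one page by hand, c_c is the cost of doing the same through the CMS, and N is the number of page updates over the period being considered.

```latex
% Cost by hand: N c_m.  Cost with the CMS: S + N c_c.
\[
N\,c_m > S + N\,c_c
\quad\Longleftrightarrow\quad
N > \frac{S}{c_m - c_c}, \qquad \text{assuming } c_m > c_c .
\]
% Purely illustrative numbers: S = 20{,}000,\ c_m = 50,\ c_c = 10
% give N > 20{,}000 / 40 = 500 page updates before the CMS pays for itself.
\]
```

Under this sketch, a five-page site that rarely changes never gets anywhere near N, while a site publishing hundreds of pages a month crosses it quickly.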

A web content management system reduces the cost of maintaining lots of uniform pages (and sub-sites). It doesn't help a company rapidly develop new concept websites. In fact, it often slows down the production of these websites, especially if the group that wants to do the innovating does not have developers who can access the CMS. Many media companies have a heavy-duty web content management system for their heavy lifting (the bulk of their content on the main site) but use lighter-weight frameworks (or CMSs that are designed to be more like frameworks) and custom code for their experimental sites (for example, The Washington Post and Django). But no matter what, if you want to innovate beyond the options and text areas that were designed into the CMS implementation, you are talking about a software development lifecycle that includes development, testing, and developers to do the work.

Jul 13, 2009

I was catching up on my blog reading and noticed that the Plone community has announced what features will be in Plone 4. Version 4 will incorporate 40 "PLIPs" (Plone Improvement Proposals, the Plone project's issue/enhancement tracking system). You can see the list of enhancements and bug fixes here. The release is expected to be completed by the end of 2009.

While, to me, 4.0 seems like a less ambitious release than 3.0, a few features caught my eye:

-

TinyMCE replaces Kupu as the default WYSIWYG editor. From a user perspective, this is probably the most noticeable change. While a number of rich text editors are supported through add-on modules ("Products"), Plone has been a bit of an outlier for using Kupu as the default and best-integrated editor. Plone has favored Kupu because of its simplicity and the well-formed HTML that it produces. However, users tend to like all the buttons and additional formatting features offered by TinyMCE and FCKeditor. TinyMCE is widely used as the standard editor in many open source and commercial CMSs. Plone will be in good company.

-

Cleaner handling of object references. Plone's repository (an object database) is hierarchical like the Java Content Repository. In most cases, business users like the similarity with the familiar filesystem model. However, like file systems, there is a disadvantage when you want the same item to appear in more than one place in the content tree. Like most hierarchical repository systems, Plone has developed a referencing system (like a symbolic link or a shortcut). Version 4.0 of Plone will make references cleaner and easier for non-technical users to use.

-

Delegated group management. Plone has a good system for managing groups and roles. However, to this point, only a site manager can control group membership. With this release, there can be group owners who manage membership for the groups that they own.

-

jQuery will be bundled. jQuery, the popular AJAX framework, is becoming nearly ubiquitous. Now Plone will ship with it bundled into the basic install. Because of this, I expect to see lots of little UI optimizations in the upcoming releases. Of course, this will be balanced with Plone's emphasis on accessibility.

-

Improvements to the self-registration system. Plone can be configured to allow users to register on the site and either be put into a pending status or become full-blown members. 4.0 will have some enhancements to fine-tune these configurations with more sophisticated behavior.

-

Better control over portlet visibility. Plone 4.0 will make it easier to configure rules that control when a portlet displays on a page. For WordPress users, I think this is a little like Widget Logic. Drupal users will recognize this as part of the blocks framework. Hopefully, Plone will not ask the user to implement the display logic in code.

-

New default theme. Plone 4 is going to ship with a new default theme based on the newly redesigned plone.org site. This will affect intranet implementations (which tend to do less custom theming work) more than external website implementations.
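The object references item above can be illustrated independently of Plone. The following is a hypothetical Python model of a hierarchical repository in which a `Reference` node acts like a symbolic link to an item stored elsewhere in the tree, so one item can appear in two places while being stored once. It is not Plone's reference API; `Item`, `Reference`, `Folder`, `Repository`, and `resolve` are made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Item:
    title: str


@dataclass
class Reference:
    """Shortcut node: points at an item that lives elsewhere in the tree."""
    target_path: str


@dataclass
class Folder:
    children: dict[str, Folder | Item | Reference] = field(default_factory=dict)


class Repository:
    """Hypothetical hierarchical repository with symbolic-link-style references."""

    def __init__(self) -> None:
        self.root = Folder()

    def _walk(self, path: str) -> Folder | Item | Reference:
        node: Folder | Item | Reference = self.root
        for part in [p for p in path.split("/") if p]:
            if not isinstance(node, Folder):
                raise KeyError(path)
            node = node.children[part]
        return node

    def add(self, path: str, node: Folder | Item | Reference) -> None:
        parent_path, _, name = path.rstrip("/").rpartition("/")
        parent = self._walk(parent_path)
        if not isinstance(parent, Folder):
            raise KeyError(parent_path)
        parent.children[name] = node

    def resolve(self, path: str) -> Folder | Item:
        """Follow references until reaching a real node; detect cycles."""
        node = self._walk(path)
        seen: set[str] = set()
        while isinstance(node, Reference):
            if node.target_path in seen:
                raise ValueError(f"reference cycle at {node.target_path}")
            seen.add(node.target_path)
            node = self._walk(node.target_path)
        return node


repo = Repository()
repo.add("/products", Folder())
repo.add("/products/chair", Item("Reading chair"))
repo.add("/home", Folder())
repo.add("/home/featured", Reference("/products/chair"))
print(repo.resolve("/home/featured").title)  # prints "Reading chair"
```

Editing the item at `/products/chair` changes what appears at `/home/featured` too, which is the whole point of a reference over a copy; the cost is that broken or circular references have to be handled, as `resolve` does here.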

Those are the big changes from what I can tell but I am sure that I am leaving things out. Please add any major oversights in the comments.

Jul 09, 2009

When I first got interested in open source software there was a lot of talk about the restrictions and liberties of various licenses and the risk that free-riders posed to the system. I have to admit that I never found these topics very interesting and usually referred the conversation to my colleague Stephen Walli (who is way more qualified in this area than I am — lawyers even listen to him!). For the most part, these (as well as the whole indemnification and SCO hysteria) have turned into non-issues, particularly for my clients who are users of the software and will probably never read a license anyway. Things tend to work themselves out.

But every once in a while, something interesting in the topic of licenses does pop up. You may remember I wrote a post describing how Bluenog took Hippo CMS, slapped their logo on it, and sold it as commercial software. Well, they are still at it and they have even gone so far as to remove any acknowledgement that they are repackaging someone else's software. The Apache Software License, which Hippo CMS uses, is very permissive and only requires that redistributions of the software contain a notice file giving credit to the original developers. Bluenog isn't even doing this. And, as you would probably expect, they are not contributing back to Hippo either.

Bluenog is clearly in violation of Hippo's licensing terms, so it may not matter what license Hippo is distributed under, but it did get me thinking about licenses again. The Apache Software License has been used very successfully for infrastructural components like the famous Apache HTTP Server and all those great Java frameworks and components. The key benefit there is achieving broad adoption. The terms are so generous that there is virtually no downside to including an Apache-licensed component in your software. Adoption is a good thing for frameworks and components because lots of users help find bugs and help the project move forward. Even if a very small percentage of developers contribute back, the scale of the user base translates into a lot of support. This low barrier to adoption is particularly good for reference implementations of standards. Tomcat, Slide, and Jackrabbit were all critical to the success of the standards they promoted.

As good as the ASL is for components and frameworks, I question its efficacy for business applications. Business applications, like Hippo, compete in a different market than infrastructure. They are going after a smaller (higher-touch) install base and they are more actively competing against other products. Business applications need to innovate and differentiate from their competitors, while infrastructure wants to be stable and standard. The potential for free-riders to undermine your investment in being unique is too great. This is why most other open source CMSs on the market are licensed under the GPL or a similar license.

From a consumer perspective, it feels like Bluenog customers are getting ripped off. They are buying a software application that should be free. Customers are essentially paying Bluenog to ask questions on the Hippo mailing list that Hippo and the community are answering for free. It feels like Bluenog's refusal to acknowledge Hippo is an attempt to protect this arbitrage. Had customers worked directly with Hippo, they would not only have saved money; they would also know that Hippo has an entirely new product, Hippo CMS 7, a ground-up rewrite of the 6.x series that Bluenog forked. I do think that this issue will eventually be worked out. Bluenog will probably not be able to keep doing business this way, even if lawyers don't get involved. But, as you can probably tell, this drama does rankle my developer and open source sensibilities.

Jul 01, 2009

One of the primary functions of a web content management system is separating content from layout. Authors create semi-structured content in a display-neutral format, and presentation templates then transform that content into web pages for regular browsers, mobile browsers, RSS feeds, email, and print. As most readers of this blog know, this separation introduces big efficiencies in re-use: content is managed in one place and appears in many places and in many formats. But this magic does not come for free. Someone has to build presentation templates that render the content, and that person needs developer skills. The template developer needs to know HTML and the special templating syntax used to retrieve and format content from the repository. The developer also needs to know how to test software for all the different conditions it will encounter: different browsers; content with extreme values in any of its elements (like a really long title or a missing summary); and even high traffic load. Because one piece of code can potentially affect every page on the site, testing is important, and it needs to be done in a safe place. The same goes for all sorts of other software configuration.

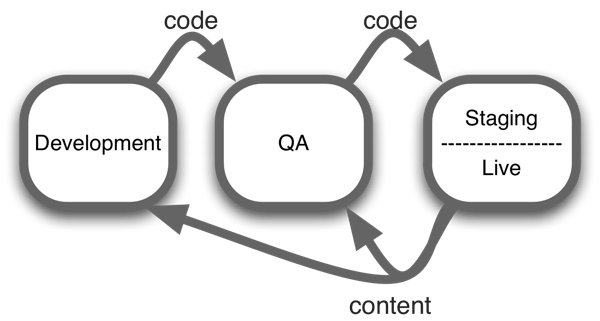

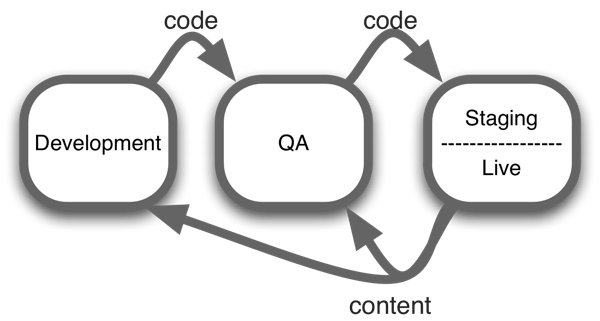

Essentially, the role of the static webmaster has been broken into two: the content contributors (who no longer need to be technical) and the developer (who needs to be more skilled than your average Dreamweaver jockey). It also breaks the management of the site into two lifecycles: the content lifecycle and the code/configuration lifecycle. The production instance of the CMS itself is designed to support the content lifecycle. CMS have workflow functionality to manage the state of an asset from draft through published to archived. They have preview functionality so that only contributors can see content that has not been published. Some CMS are designed so that the development lifecycle can also occur in the production instance. This is usually done by creating workspaces or sandboxes, essentially treating code like another category of content. Even then, you still want a QA instance of the system so you can test software upgrades (of the core) before applying them to your live site. In most CMS, however, developers work in separate environments (either individual developer environments and a code staging area, or a shared development environment) and not in the live production instance of the CMS.
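The content lifecycle described above can be pictured as a small state machine. The sketch below assumes a simple policy (draft to published to archived, with unpublishing back to draft allowed) and a preview rule where contributors can see everything while the public sees only published assets. The names `State`, `Asset`, `TRANSITIONS`, and `visible_to` are illustrative; real CMS workflows are usually configurable and richer.

```python
from enum import Enum


class State(Enum):
    DRAFT = "draft"
    PUBLISHED = "published"
    ARCHIVED = "archived"


# Hypothetical workflow policy: which states each state may move to.
TRANSITIONS = {
    State.DRAFT: {State.PUBLISHED},
    State.PUBLISHED: {State.ARCHIVED, State.DRAFT},  # archive, or unpublish
    State.ARCHIVED: set(),  # archived assets stay archived in this sketch
}


class Asset:
    def __init__(self, name: str) -> None:
        self.name = name
        self.state = State.DRAFT  # every asset starts as a draft

    def transition(self, target: State) -> None:
        # Reject any move the workflow policy does not allow.
        if target not in TRANSITIONS[self.state]:
            raise ValueError(
                f"cannot move {self.name} from {self.state.value} to {target.value}"
            )
        self.state = target


def visible_to(asset: Asset, is_contributor: bool) -> bool:
    """Preview rule: the public sees only published assets; contributors see all."""
    return asset.state is State.PUBLISHED or is_contributor
```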

While the content and the software lifecycles are de-coupled, they are interdependent. Developers need realistic content to develop and test code. The content relies on the code to define and display itself. There are lots of situations where these aspects get tangled. For example, when a new field is added to a content type, it needs to be populated (sometimes manually), and the presentation templates need to be modified to display it. There are also cases where the line between content and code starts to blur: contributors style their content with CSS classes that are defined in style sheets; contributors can embed a tag that calls some additional display logic (like inserting a re-usable display component); contributors can build web forms that need to be submitted to code that does something with the information.
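Here is a hypothetical illustration of the blurred line, where a contributor embeds a tag in content that calls display logic. The `{{component:name}}` syntax, the `COMPONENTS` registry, and `expand_components` are assumptions for this sketch, not any product's feature. Notice that content now depends on code: if a component has not been deployed to an environment yet, the tag cannot render there.

```python
import re
from typing import Callable, Dict

# Registry of reusable display components, maintained by developers
# (part of the code/configuration lifecycle, not the content lifecycle).
COMPONENTS: Dict[str, Callable[[], str]] = {
    "newsletter-signup": lambda: (
        '<form class="signup" action="/subscribe" method="post">'
        '<input name="email"></form>'
    ),
}

# Hypothetical tag syntax that contributors type into rich-text content.
TAG = re.compile(r"\{\{component:([a-z0-9-]+)\}\}")


def expand_components(body: str) -> str:
    """Replace each embedded tag with the output of the matching component."""

    def replace(match: re.Match) -> str:
        name = match.group(1)
        component = COMPONENTS.get(name)
        if component is None:
            # Content references code that is not deployed here: fail soft.
            return f"<!-- unknown component: {name} -->"
        return component()

    return TAG.sub(replace, body)
```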

The standard approach for managing these interdependencies is what I call "code forward, content backward." Code and configuration are developed in a development environment and tested in a QA environment. When they are ready, they are deployed to production. Content is developed and previewed in the production instance, which contains both the staging (or preview) and live content states. Periodically, content should be published backwards to the development and QA instances so that testing can be as realistic as possible. In cases where the code/configuration and content are very tightly coupled (like when you need to break one field into two), you may need to export production content to the QA instance, transform the content there, test it with the newest code, and then push that content and code back to production at the same time. When you do this, make sure that nobody is adding content to production in the meantime, because that content will be overwritten or (worse) cause some kind of corruption.
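For the tightly coupled case, the content transformation done in QA might look like this minimal sketch. It assumes exported content is a list of dictionaries and that a hypothetical staff-profile type is splitting `name` into `first_name` and `last_name`. It copies rather than mutates items and skips items that are already migrated, so it can be rerun safely against a fresh export.

```python
from typing import Dict, List

Item = Dict[str, str]


def split_name_field(items: List[Item]) -> List[Item]:
    """Hypothetical migration: split the `name` field into two new fields.

    Items without a `name` field (already migrated) pass through unchanged,
    so running the transformation twice gives the same result.
    """
    migrated = []
    for item in items:
        new = dict(item)  # never mutate the exported copy in place
        if "name" in new:
            first, _, last = new.pop("name").strip().partition(" ")
            new["first_name"] = first
            new["last_name"] = last  # empty string for single-word names
        migrated.append(new)
    return migrated
```

Splitting on the first space is a deliberately naive rule ("Mary Ann Evans" becomes `Mary` / `Ann Evans`), which is exactly why the transformed content should be reviewed in QA before it goes back to production.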

Different CMS handle the migration of code and content in different ways. Some provide nice graphical utilities to export configurations from one instance of the application to another. In other products, there are ways to manually transfer the settings as a collection of files. Some products don't support this at all, so you have to manually repeat the same steps on each environment you are managing. When you are evaluating CMS products, keep these requirements in mind. Otherwise, you will be in for a surprise as you near the launch date of your new website and need to fix bugs on live content.
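Where a product only supports moving settings as a collection of files, a promotion script often amounts to something like the sketch below. It assumes configuration can be represented as a dictionary of JSON-serializable sections; `export_config`, `import_config`, and `diff_config` are hypothetical helpers, not any CMS's API. Writing one file per section keeps the export easy to version and to diff before it is applied to the next environment.

```python
import json
from pathlib import Path
from typing import Any, Dict

Config = Dict[str, Any]


def export_config(config: Config, directory: Path) -> None:
    """Write each configuration section to its own JSON file."""
    directory.mkdir(parents=True, exist_ok=True)
    for section, settings in config.items():
        path = directory / f"{section}.json"
        # sort_keys gives stable output, so version-control diffs stay small.
        path.write_text(json.dumps(settings, indent=2, sort_keys=True))


def import_config(directory: Path) -> Config:
    """Read the exported files back into a configuration dictionary."""
    return {
        path.stem: json.loads(path.read_text())
        for path in sorted(directory.glob("*.json"))
    }


def diff_config(source: Config, target: Config) -> Dict[str, str]:
    """Report which sections a promotion from source to target would touch."""
    report = {}
    for section in source.keys() | target.keys():
        if section not in target:
            report[section] = "added"
        elif section not in source:
            report[section] = "only in target"
        elif source[section] != target[section]:
            report[section] = "changed"
    return report
```

Running `diff_config` before importing makes the promotion reviewable: unexpected "changed" or "only in target" entries are a sign that someone edited configuration directly in the target environment.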