Doubt

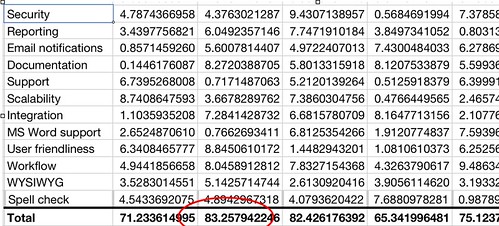

After collecting requirements, the second most difficult component of a CMS selection is taking all the information that was gathered during the evaluation phase and using it to make a decision. This is where people get crazy with spreadsheets and scoring in the hopes that math will somehow heroically make a complicated and confusing (and, lets face it, subjective) decision obvious and irrefutable. The process looks something like this... There are a bunch of selection criteria. People rate the products on each criterion. People weight the criteria. You do some multiplication and addition and out comes some very quantitative looking numbers. Nothing looks more convincing than a score where one option has more points than another. But, users don't necessarily want to use a system just because it has the highest cumulative, weighted score. They want to use a system that helps them efficiently get their jobs done while introducing the fewest number of annoyances. if the measurement of accuracy is the overall satisfaction with the solution, this method is extremely faulty.

There are several reasons why the matrix scoring method fails to accurately select the right solution. First, the rating and weighting wind up being very subjective and arbitrary. Veterans of this approach know this to be true when the they remember the feeling of not knowing what to put down or wanting to change their score when they see another product or have more coffee. Second, the final score hides information that is important to the users. A typical example is where a user finds a very important (to him) feature totally unusable but that is overshadowed by excellent ratings in a bunch of less important features. Usually you can't correct this with weightings - especially if there are lots of selection criteria. You can't discuss trade-offs and compromises if you are just working with total scores. Lastly, criteria tend to be of unequal granularity. How can a broad criteria like "usability" be compared with something as specific as "SSL on the login page?"

I take a different approach to the decision making process. Instead of forcing the selection committee into making numerical ratings, I ask them to list their doubts with each solution. Examples of doubts are:

-

a concern that the feature would not support a specific task

-

unnecessary complexity or awkward behavior in doing a specific task

-

an unsatisfactory explanation by the supplier about how a feature worked

-

doubt about the vendor's stability or ability to support the customer

-

a potential technical incompatibility with the legacy infrastructure

Each of these doubts are investigated as whether they are valid (that is, if it was a misunderstanding or oversight), if there is a suitable work-around, or if there is a reasonable compromise. Through some facilitated sessions, we work through comparing the relative weaknesses of the competing solutions and determining what is tolerable. Follow up demos and calls with the vendors are scheduled and executed. Ultimately, the solution with the fewest legitimate and significant concerns wins. Facilitating these sessions is not as easy as simply reporting matrix scores but I think that it is good that people put some real intellectual energy into making such an important and complex choice.

At first glance, this system seems designed for selecting the lesser of evils and to some extent that is true - there is no such thing as a perfect solution and there will always be compromises (I should note here that there is an option of selecting nothing if no solution is good enough) - but it is really no worse than a numerical system that decides a 5 out of 1000 score is better than 3 out of a 1000. The benefit of the doubt technique is that it keeps the focus on things that have real impact on users and forces users to think through the implications of specific aspects of the solution. This is better than having a user register their concern as low numerical score and then just moving on. A secondary benefit selection committee members learn about their needs and software features as they watch demos and their selection criteria becomes more sophisticated. This approach allows potentially important information to enter the decision making process at any time. Also, after the product is selected, the selection committee can all clearly verbalize the reason behind the decision. If there is a complaint about the implemented solution, a selection committee member can say that they identified it as a concern and then explain the plan to lessen the impact.

I will be discussing this technique as well as all the other components of my CMS selection methodology in my "How to Select a Web Content Management System" workshop at the upcoming Gilbane Conference in San Francisco (June 2-5, 2009). Register before April 28th and save $200 off. Sign up for the full conference package and get an iPhone Touch.